Are you polite to ChatGPT? You might want to reconsider

As language models continue to advance, the temptation to anthropomorphize these systems and treat them as human-like entities grows stronger. However, this approach carries significant risks.

One of the classic theories in anthropology concerns how language shapes our worldview. This is called the Sapir-Whorf hypothesis. While large language models are remarkably adept at producing human-like text, they lack the deeper understanding and contextual intelligence that characterizes genuine human communication and reasoning.

I have been deeply interested in how people talk about – and interact with – language models in ways that could be described as treating them as peers, as colleagues, or as people in general. I’ve started several face-to-face and online discussions about this theme, and it seems that there are three ways people describe their motivation for being mindfully polite to LLMs (asking, saying thank you, using social courtesies):

1. The potential of AI overlords

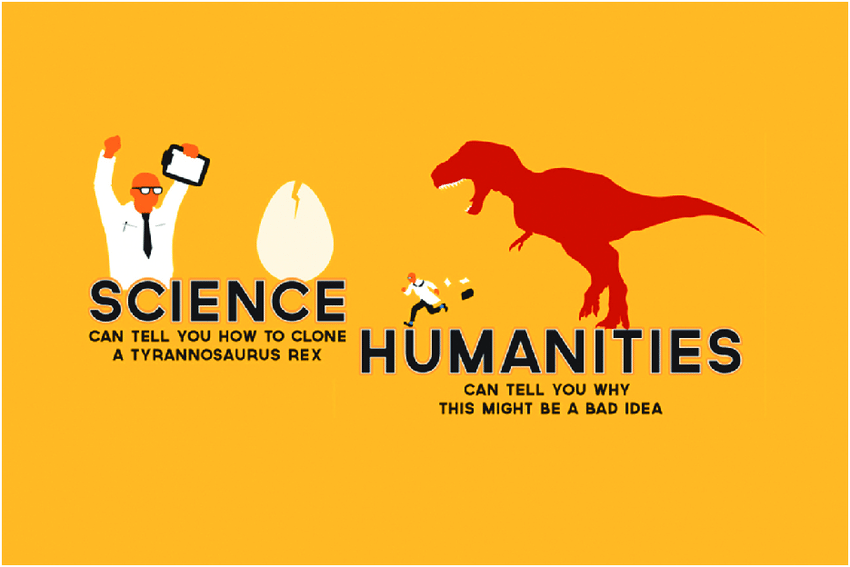

While this reason is often loaded with sarcasm, plenty of studies have been conducted on how people perceive AI and trust algorithmic systems. Being polite to AI is a “just in case” scenario, very similar to practicing religious rituals out of habit and “just in case there is a judgment day someday” (see the picture above).

2. I’m a polite person – to all

This reason is often expressed as a default social behavior – being polite is a virtuous trait, and it should also extend to interacting with AI. It's also a way to teach the LLMs about appropriate social behavior, in contrast to the violent material found elsewhere online.

3. The UI resembles a dialogue with a colleague

Current LLM user interfaces resemble chatting with a colleague, almost as if having a back-and-forth conversation. This triggers a natural inclination to engage with the AI in a human-like manner.

While these motivations are understandable, it is critical that we resist the urge to anthropomorphize language models.

But is there harm in being polite?

Absolutely not. (But there might be.)

However, anthropomorphizing LLMs beyond a basic courteous affect could lead to several concerning outcomes:

First, it may instill a false sense of reciprocity and trust in the capabilities of the system, leading users to rely too heavily on the model or to over-attribute human-like qualities to it. Language models, no matter how advanced, are ultimately statistical pattern matchers trained on huge corpora of text data, and lack the deeper understanding, reasoning, and contextual intelligence that characterize human cognition. One discussant in one of my social media posts about this topic claimed that women and children in the past were not considered cognitive beings capable of conversing about complex topics. Why should we treat AI differently, then? This is a prime example of over-attributing human-like qualities to a technology.

Second, anthropomorphism could make users more vulnerable to manipulation, as they may be more inclined to trust and confide in the system. The ease with which LLMs can engage in conversational exchange may lead users to let their guard down and share sensitive information, or to be influenced by the system's responses in ways they would not with a clearly non-human agent.

This is why one key concern about AI is the potential for misuse and unintended consequences. Language models can be employed for both harmful and beneficial uses, and their impact may depend heavily on the context in which they are deployed. For example, a text-to-image model could be used with consent as part of an art piece, or without consent as a means of producing disinformation or harassment.

What does it mean to be AI-assisted humans?

The growing prevalence of conversational AI assistants, such as ChatGPT, has led to a concerning trend where individuals often fail to recognize the underlying nature of these systems (Kasneci et al., 2023). Rather than acknowledging the technological underpinnings, users may develop a false sense of trust and over-reliance, believing that they are interacting with a human-like entity. This risks obscuring the fundamental differences between human communication and the output of language models, potentially leading to a breakdown in the clear understanding of the limitations and capabilities of these AI systems.

To mitigate the risks of anthropomorphism, it is essential for developers and users to maintain a clear and transparent understanding of the nature of language models. This raises a few questions:

Why do we need a dialogue UI with LLMs?

Why do we need to make them seem more human-like?

As prompting skills become more prevalent, are social courtesies embedded in the algorithm in such a way that they are necessary for a quality output?

There is also a fundamental philosophical and ethical question at the heart of this issue: to what extent should we treat these systems as possessing human-like attributes, such as agency, autonomy, and even consciousness? Sci-fi literature contains many cautionary (and also utopian) tales about the dangers of treating AI as human-like, from Isaac Asimov's Robot stories to the Terminator franchise (which seems to be most often mentioned in the sarcastic response “I’m polite just in case [there will be Skynet]”).

These questions have far-reaching implications for the development and deployment of AI-powered language models. Given the complexity and potential impact of these systems, designers, product owners and software developers should be mindful of the unintended implications of their choices when creating AI-based applications. We already lack a full process for applying ANY ethical considerations in product design, which is why implementing an ethical AI development process is so urgent.

Some ideas on how to consider anthropomorphism and AI design

Humans tend to anthropomorphize technology and things. It's a universal part of the human experience. We name our cars and our Roombas, we go “aaawww” at delivery robots (or sometimes lash out at them). To address these risks, a multifaceted approach is needed that involves technical, ethical, and cultural considerations.

1. Emphasize the artificiality of the system.

This can be achieved through visual design choices, such as using non-human avatars or interfaces that clearly distinguish the AI from a human user. For example, using distinct color schemes for the AI's responses or employing robotic voice synthesizers can serve as constant reminders that the interaction is not with a human. However, as we have seen recently in many popular applications, AI-based features are seeping into existing software and applications, where they become almost indistinguishable from human-created content.

2. Be transparent about the capabilities and limitations, AND educate users.

Developers should critically examine different scenarios of their decisions when deploying new features. This can help set realistic expectations and avoid the pitfalls of anthropomorphization (Feldman et al., 2019). While AI systems may never fully explain their reasoning, providing insights into the data sources or decision-making processes can help users understand the limitations and avoid attributing human-like qualities. Imagine a chatbot that, after answering a question, provides a brief explanation like "My response is based on analyzing patterns in a large dataset of text and code" – this transparency can ground the user's understanding. Education can involve incorporating disclaimers within the interface, providing tutorials, or even gamifying the learning process to foster a more informed and discerning user base.

3. Ethical guidelines and regulations.

These should be developed to ensure that AI-powered language models are designed and deployed responsibly. We need more public discussion within – and outside – the industry to shape shared norms, best practices, and comprehensive frameworks that can guide the ethical and safe development of these technologies. Does your company invest time and resources for you and your team to consider this?

By implementing these design strategies, we can encourage a more balanced and realistic perception of AI systems, fostering a future where humans and AI can collaborate effectively without the pitfalls of misplaced anthropomorphism.

Remember: just because we can doesn’t mean we should.

Sources:

Anderljung, Markus, Joslyn Barnhart, Jade Leung, Anton Korinek, Cullen O'Keefe, Jess Whittlestone, Shahar Avin, Miles Brundage, Justin B. Bullock, Duncan Cass-Beggs, Ben Chang, Tantum Collins, Tim Fist, Gillian K. Hadfield, Alan Hayes, L. Lawrence Ho, Sara Hooker, Eric Horvitz, Noam Kolt, Jonas Schuett, Yonadav Shavit, Divya Siddarth, Robert F. Trager, and Kai-Dietrich Wolf. 2023. "Frontier AI Regulation: Managing Emerging Risks to Public Safety" Cornell University. https://doi.org/10.48550/arxiv.2307.03718.

Harrer, Stefan. 2023. "Attention is not all you need: the complicated case of ethically using large language models in healthcare and medicine" Elsevier BV 90 : 104512-104512. https://doi.org/10.1016/j.ebiom.2023.104512.

Hosseini, Mohammad, David B. Resnik, and Kristi Holmes. 2023. "The ethics of disclosing the use of artificial intelligence tools in writing scholarly manuscripts" SAGE Publishing 19 (4) : 449-465. https://doi.org/10.1177/17470161231180449.

Plant, Richard, Mario Valerio Giuffrida, and Dimitra Gkatzia. 2022. "You Are What You Write: Preserving Privacy in the Era of Large Language Models" Cornell University. https://doi.org/10.48550/arxiv.2204.09391.

Sisón, Alejo José G., Marco Tulio Daza, Roberto Gozalo-Brizuela, and Eduardo C. Garrido-Merchán. 2023. "ChatGPT: More than a Weapon of Mass Deception, Ethical challenges and responses from the Human-Centered Artificial Intelligence (HCAI) perspective" Cornell University. https://doi.org/10.48550/arxiv.2304.11215.

Zhou, Xuhui, Zhe Su, and Maarten Sap. 2024. "Is this the real life? Is this just fantasy? The Misleading Success of Simulating Social Interactions With LLMs" Cornell University. https://doi.org/10.48550/arxiv.2403.05020.

I didn't quite understand why anthropomorphising AI is a problem. Is it about the risk of trusting its capabilities beyond what the product is designed to do? And therefore people are misled, which can lead to...? It's good to be aware of the limitations. When Claude is hallucinating, I get reminded that it's just another technical assistant tool, however much better than what we had three years ago.

I think there is a huge step between acting politely when using technology (e.g. saying please) and actually believing it's a conscious being worthy of moral concern. Another concern could of course be: if you value having true beliefs, then anthropomorphising something which isn't conscious is bad, as it potentially deceives naïve people.

On the other hand, LLMs have occasionally been better discussion partners for me than humans have for solving personal problems. And compared to therapy, a lot cheaper. (That statement naturally comes with a lot of caveats, like the risks of relying on LLMs for serious mental health problems. But for me it was helpful when my friends' and family's abilities to help me in my situation weren't enough, but it wasn't so big an issue that it needed a specialised professional.)

ChatGPT and other LLMs being designed to match human interaction makes a lot of sense to me in making the products more user-friendly. If I can give instructions like I'd give them to another person, I don't have to learn a new way of talking, and that makes the product a lot more accessible. Whether they need to have human-like names and faces is easier to debate. But I think more kindness and empathy, even when directed towards something nonliving, can't be too bad in the world we live in.